|

Doxygen

1.9.8

|

Classes | |

| struct | TasOptimization::OptimizationStatus |

Typedefs | |

| using | TasOptimization::ObjectiveFunctionSingle = std::function< double(const std::vector< double > &x)> |

| Generic non-batched objective function signature. | |

| using | TasOptimization::ObjectiveFunction = std::function< void(const std::vector< double > &x_batch, std::vector< double > &fval_batch)> |

| Generic batched objective function signature. | |

| using | TasOptimization::GradientFunctionSingle = std::function< void(const std::vector< double > &x_single, std::vector< double > &grad)> |

| Generic non-batched gradient function signature. | |

| using | TasOptimization::ProjectionFunctionSingle = std::function< void(const std::vector< double > &x_single, std::vector< double > &proj)> |

| Generic non-batched projection function signature. | |

Functions | |

| void | TasOptimization::checkVarSize (const std::string method_name, const std::string var_name, const int var_size, const int exp_size) |

| ObjectiveFunction | TasOptimization::makeObjectiveFunction (const int num_dimensions, const ObjectiveFunctionSingle f_single) |

| Creates a TasOptimization::ObjectiveFunction object from a TasOptimization::ObjectiveFunctionSingle object. | |

| void | TasOptimization::identity (const std::vector< double > &x, std::vector< double > &y) |

| Generic identity projection function. | |

| double | TasOptimization::computeStationarityResidual (const std::vector< double > &x, const std::vector< double > &x0, const std::vector< double > &gx, const std::vector< double > &gx0, const double lambda) |

Several type aliases and utility functions similar to those in the DREAM module.

| using TasOptimization::ObjectiveFunctionSingle = typedef std::function<double(const std::vector<double> &x)> |

Generic non-batched objective function signature.

Accepts a single input x and returns the value of the function at the point x.

Example of a 2D quadratic function:
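A minimal sketch of such a function, assuming the quadratic f(x) = x[0]^2 + x[1]^2. The choice of function and the name `quadratic` are illustrative assumptions. The alias is re-declared locally only so the snippet compiles on its own; in real code it comes from the Tasmanian optimization headers.

```cpp
#include <functional>
#include <vector>

// Local stand-in for TasOptimization::ObjectiveFunctionSingle (same signature as documented above).
using ObjectiveFunctionSingle = std::function<double(const std::vector<double> &x)>;

// Illustrative choice: f(x) = x[0]^2 + x[1]^2, which has its minimum at the origin.
const ObjectiveFunctionSingle quadratic = [](const std::vector<double> &x) -> double {
    return x[0] * x[0] + x[1] * x[1];
};
```

Calling `quadratic({1.0, 2.0})` evaluates the function at the point (1, 2).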

| using TasOptimization::ObjectiveFunction = typedef std::function<void(const std::vector<double> &x_batch, std::vector<double> &fval_batch)> |

Generic batched objective function signature.

Batched version of TasOptimization::ObjectiveFunctionSingle. Accepts multiple points x_batch and writes their corresponding values into fval_batch. The points are stored consecutively in x_batch, so the total size of x_batch is num_dimensions times num_batch, and the size of fval_batch is num_batch. The Tasmanian optimization methods always provide inputs of the correct size, so no error checking is needed.

Example of a 2D batch quadratic function:
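A minimal sketch of a batched objective, assuming the same illustrative quadratic f(x) = x[0]^2 + x[1]^2 and the consecutive storage layout described above. The name `quadratic_batch` is hypothetical, and the alias is re-declared locally only to keep the snippet self-contained.

```cpp
#include <cstddef>
#include <functional>
#include <vector>

// Local stand-in for TasOptimization::ObjectiveFunction (same signature as documented above).
using ObjectiveFunction =
    std::function<void(const std::vector<double> &x_batch, std::vector<double> &fval_batch)>;

// Illustrative choice: evaluates f(x) = x[0]^2 + x[1]^2 at every 2D point in the batch.
const ObjectiveFunction quadratic_batch =
    [](const std::vector<double> &x_batch, std::vector<double> &fval_batch) -> void {
    // fval_batch arrives with num_batch entries; point i occupies x_batch[2*i] and x_batch[2*i + 1].
    for (std::size_t i = 0; i < fval_batch.size(); i++) {
        const double x0 = x_batch[2 * i];
        const double x1 = x_batch[2 * i + 1];
        fval_batch[i] = x0 * x0 + x1 * x1;
    }
};
```

The loop relies on the caller sizing fval_batch correctly, which matches the guarantee stated for the Tasmanian optimization methods.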

| using TasOptimization::GradientFunctionSingle = typedef std::function<void(const std::vector<double> &x_single, std::vector<double> &grad)> |

Generic non-batched gradient function signature.

Accepts a single input x_single and writes the gradient of the objective function at x_single into grad. Note that grad and x_single have the same size.

Example of the gradient of a 2D quadratic function:
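A minimal sketch of a gradient callback, assuming the illustrative quadratic f(x) = x[0]^2 + x[1]^2, whose gradient is (2 x[0], 2 x[1]). The name `quadratic_grad` is hypothetical, and the alias is re-declared locally only to keep the snippet self-contained.

```cpp
#include <functional>
#include <vector>

// Local stand-in for TasOptimization::GradientFunctionSingle (same signature as documented above).
using GradientFunctionSingle =
    std::function<void(const std::vector<double> &x_single, std::vector<double> &grad)>;

// Gradient of the illustrative quadratic f(x) = x[0]^2 + x[1]^2.
const GradientFunctionSingle quadratic_grad =
    [](const std::vector<double> &x_single, std::vector<double> &grad) -> void {
    // Assigning the whole vector works whether or not the caller pre-sized grad.
    grad = {2.0 * x_single[0], 2.0 * x_single[1]};
};
```

Whether the caller pre-sizes grad is not stated here, so the sketch assigns the full vector rather than writing element by element.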

| using TasOptimization::ProjectionFunctionSingle = typedef std::function<void(const std::vector<double> &x_single, std::vector<double> &proj)> |

Generic non-batched projection function signature.

Accepts a single input x_single and returns the projection proj of x_single onto a user-specified domain.

Example of a 2D projection onto the box [-1, 1] x [-1, 1]:
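A minimal sketch of a box projection, assuming each coordinate is clamped independently to [-1, 1]. The name `box_projection` is hypothetical, and the alias is re-declared locally only to keep the snippet self-contained.

```cpp
#include <algorithm>
#include <cstddef>
#include <functional>
#include <vector>

// Local stand-in for TasOptimization::ProjectionFunctionSingle (same signature as documented above).
using ProjectionFunctionSingle =
    std::function<void(const std::vector<double> &x_single, std::vector<double> &proj)>;

// Projects a point onto the box by clamping every coordinate to [-1, 1].
const ProjectionFunctionSingle box_projection =
    [](const std::vector<double> &x_single, std::vector<double> &proj) -> void {
    proj.resize(x_single.size());  // defensive: make sure proj matches the input size
    for (std::size_t i = 0; i < x_single.size(); i++) {
        proj[i] = std::min(std::max(x_single[i], -1.0), 1.0);
    }
};
```

Points already inside the box are returned unchanged, which is the defining property of a projection.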

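| void TasOptimization::checkVarSize (const std::string method_name, const std::string var_name, const int var_size, const int exp_size) | inline |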

Checks if a variable size var_size associated with var_name inside method_name matches an expected size exp_size. If it does not match, a runtime error is thrown.
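The check described above can be sketched as follows. This is a hypothetical stand-in named `checkVarSizeSketch`, not the library's implementation: the use of std::runtime_error is one reading of "a runtime error is thrown", and the wording of the message is an assumption.

```cpp
#include <stdexcept>
#include <string>

// Hypothetical stand-in mirroring the documented behavior of TasOptimization::checkVarSize.
inline void checkVarSizeSketch(const std::string method_name, const std::string var_name,
                               const int var_size, const int exp_size) {
    if (var_size != exp_size) {
        // Message text is an assumption; the documentation only says a runtime error is thrown.
        throw std::runtime_error("Size of " + var_name + " (" + std::to_string(var_size) +
                                 ") in " + method_name + "() does not match the expected size " +
                                 std::to_string(exp_size));
    }
}
```

A matching size returns silently, so the call can be placed at the top of a method as a guard.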

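| ObjectiveFunction TasOptimization::makeObjectiveFunction (const int num_dimensions, const ObjectiveFunctionSingle f_single) | inline |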

Creates a TasOptimization::ObjectiveFunction object from a TasOptimization::ObjectiveFunctionSingle object.

Given a TasOptimization::ObjectiveFunctionSingle f_single and the size of its input num_dimensions, returns a TasOptimization::ObjectiveFunction that evaluates a batch of points by applying f_single to each point in the batch.
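One way such a wrapper could work is sketched below. The helper `makeBatchedSketch` is hypothetical and is not the Tasmanian implementation. It assumes the consecutive storage layout of the batched signature and that fval_batch is already sized to the number of points. Both aliases are re-declared locally only to keep the snippet self-contained.

```cpp
#include <algorithm>
#include <cstddef>
#include <functional>
#include <vector>

// Local stand-ins for the TasOptimization aliases (same signatures as documented above).
using ObjectiveFunctionSingle = std::function<double(const std::vector<double> &x)>;
using ObjectiveFunction =
    std::function<void(const std::vector<double> &x_batch, std::vector<double> &fval_batch)>;

// Hypothetical helper: wraps a single-point objective into a batched one.
inline ObjectiveFunction makeBatchedSketch(const int num_dimensions,
                                           const ObjectiveFunctionSingle f_single) {
    return [=](const std::vector<double> &x_batch, std::vector<double> &fval_batch) -> void {
        const std::size_t dims = static_cast<std::size_t>(num_dimensions);
        const std::size_t num_batch = x_batch.size() / dims;
        std::vector<double> x(dims);
        for (std::size_t i = 0; i < num_batch; i++) {
            // Copy point i out of the consecutive storage, then evaluate it.
            std::copy_n(x_batch.begin() + static_cast<std::ptrdiff_t>(i * dims), dims, x.begin());
            fval_batch[i] = f_single(x);
        }
    };
}
```

Capturing f_single by value keeps the returned function valid after the original callable goes out of scope.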

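| double TasOptimization::computeStationarityResidual (const std::vector< double > &x, const std::vector< double > &x0, const std::vector< double > &gx, const std::vector< double > &gx0, const double lambda) | inline |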

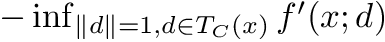

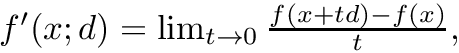

Computes the minimization stationarity residual for a point x evaluated from a gradient descent step at x0 with stepsize lambda. More specifically, this residual is an upper bound for the quantity:

the set